1. The concept of Data Masking

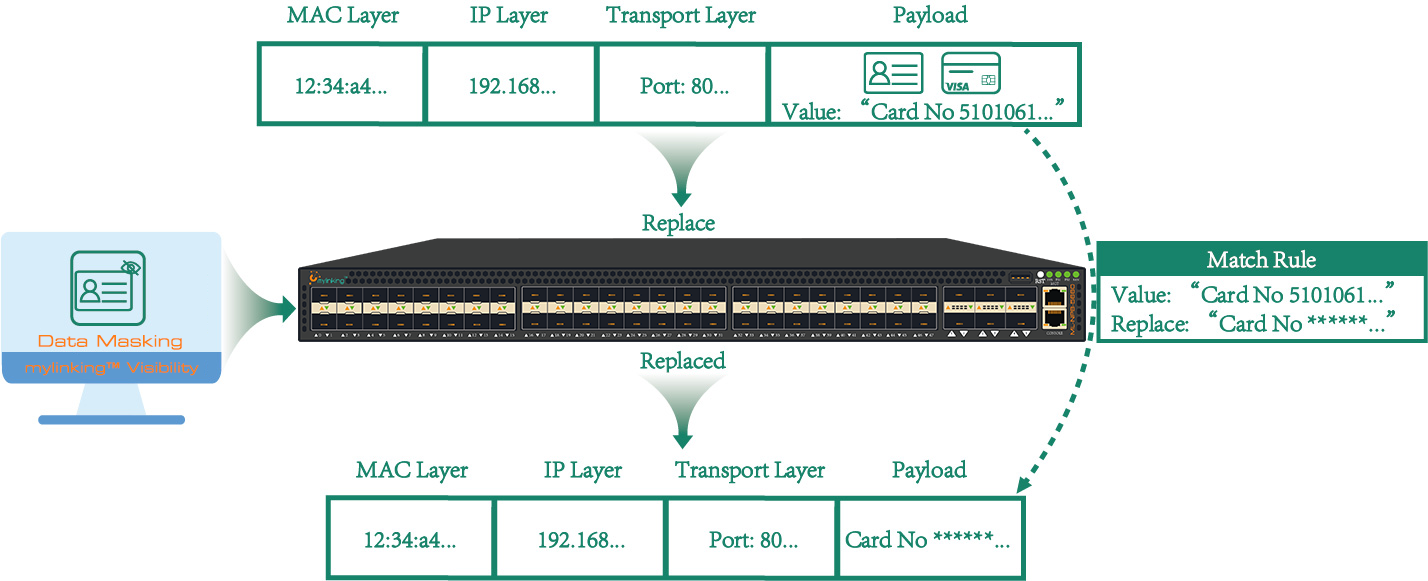

Data masking is also known as data desensitization. It is a technique for converting, modifying, or covering sensitive data, such as mobile phone numbers and bank card numbers, according to given masking rules and policies. It is primarily used to prevent sensitive data from being used directly in untrusted environments.

Data masking principle: Masking should preserve the characteristics, business rules, and relationships of the original data, so that subsequent development, testing, and data analysis are not affected. Data should remain consistent and valid before and after masking.

2. Data Masking classification

Data masking can be divided into static data masking (SDM) and dynamic data masking (DDM).

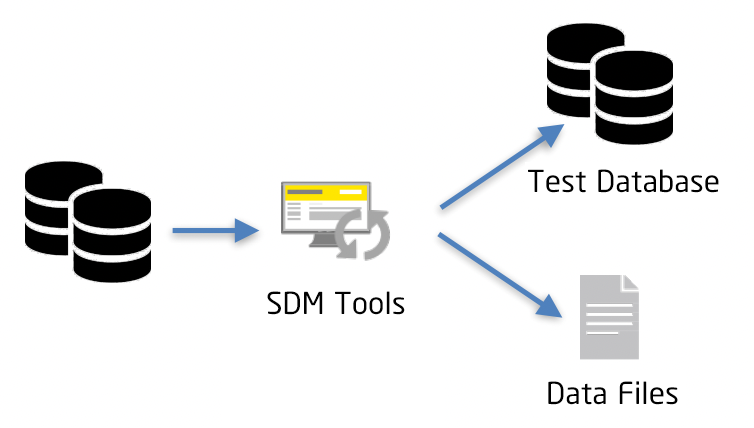

Static data masking (SDM): Static data masking requires a new non-production database that is isolated from the production environment. Sensitive data is extracted from the production database, masked, and then stored in the non-production database. In this way, the masked data is isolated from the production environment, which meets business needs while keeping production data secure.

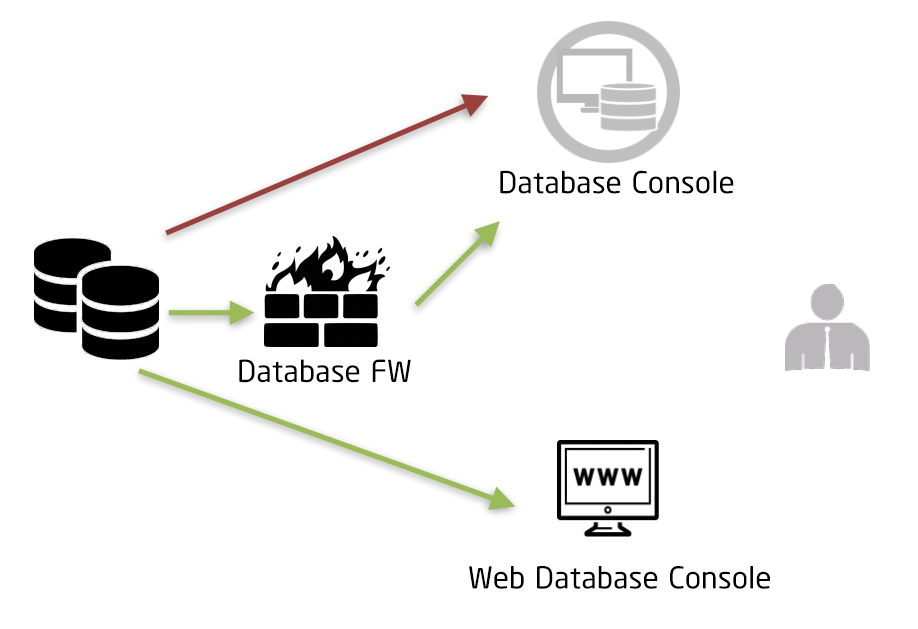

Dynamic data masking (DDM): Dynamic data masking is generally used in the production environment to mask sensitive data in real time as it is read. Sometimes the same sensitive data must be masked to different degrees in different situations. For example, different roles and permissions may be subject to different masking schemes.

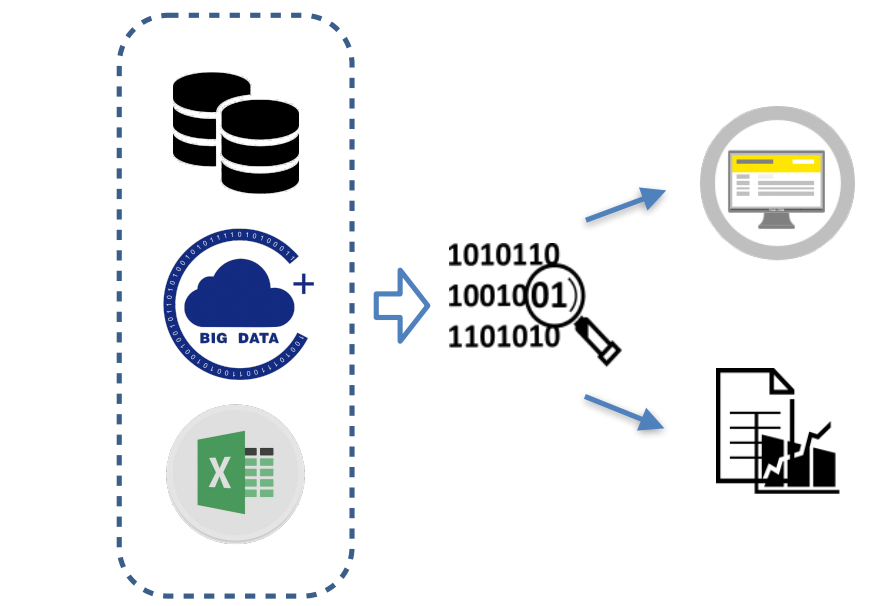
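The role-dependent behavior of dynamic masking can be illustrated with a small sketch. The role names (`admin`, `support`) and the rule of keeping the first three and last four digits are assumptions made for illustration, not part of any particular product.

```python
def mask_phone_for_role(phone: str, role: str) -> str:
    """Return the phone number as the given (hypothetical) role may see it."""
    if role == "admin":
        return phone  # full value, no masking
    if role == "support" and len(phone) >= 7:
        # keep the first 3 and last 4 characters, hide the middle
        return phone[:3] + "*" * (len(phone) - 7) + phone[-4:]
    return "*" * len(phone)  # any other role sees a fully masked value


for role in ("admin", "support", "guest"):
    print(role, mask_phone_for_role("13812345678", role))
```

The stored value is never changed; only the value returned to each reader differs.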

Application of masking in data reports and data products

Such scenarios mainly include internal data monitoring products or dashboards, external-facing data products, and reports based on data analysis, such as business reports and project reviews.

3. Data Masking Solution

Common data masking schemes include: invalidation, random value, data replacement, symmetric encryption, average value, offset and rounding, etc.

Invalidation: Invalidation refers to the encryption, truncation, or hiding of sensitive data. This scheme usually replaces real data with special symbols (such as *). The operation is simple, but users cannot know the format of the original data, which may affect subsequent data applications.
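As a minimal sketch of invalidation, the helper below (the name `invalidate` and its parameters are illustrative assumptions) replaces characters with a symbol and can optionally keep a few leading or trailing characters visible.

```python
def invalidate(value: str, keep_head: int = 0, keep_tail: int = 0, symbol: str = "*") -> str:
    """Replace all but the first keep_head and last keep_tail characters with symbol."""
    hidden = len(value) - keep_head - keep_tail
    if hidden <= 0:
        # nothing would be hidden, so hide everything instead
        return symbol * len(value)
    tail = value[len(value) - keep_tail:]
    return value[:keep_head] + symbol * hidden + tail


print(invalidate("13812345678"))               # ***********
print(invalidate("6222021234567890", 4, 4))    # 6222********7890
```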

Random Value: The random value refers to the random replacement of sensitive data (numbers replace digits, letters replace letters, and characters replace characters). This masking method will ensure the format of sensitive data to a certain extent and facilitate subsequent data application. Masking dictionaries may be needed for some meaningful words, such as names of people and places.
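A rough sketch of random-value masking, assuming ASCII digits and letters only: each digit is replaced by a random digit, each letter by a random letter of the same case, and every other character is left in place so the format survives. The function name is hypothetical, and names of people or places would still need a masking dictionary.

```python
import random
import string


def random_replace(value: str, rng: random.Random) -> str:
    """Replace digits with random digits and letters with random letters of the same case."""
    out = []
    for ch in value:
        if ch in string.digits:
            out.append(rng.choice(string.digits))
        elif ch in string.ascii_uppercase:
            out.append(rng.choice(string.ascii_uppercase))
        elif ch in string.ascii_lowercase:
            out.append(rng.choice(string.ascii_lowercase))
        else:
            out.append(ch)  # separators and other characters keep the format
    return "".join(out)


print(random_replace("AB-1234 xyz", random.Random()))
```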

Data Replacement: Data replacement is similar to invalidation and random values, except that instead of special characters or random values, the sensitive data is replaced with a specific, predefined value.

Symmetric Encryption: Symmetric encryption is a special, reversible masking method. It encrypts sensitive data using an encryption key and algorithm, and the data can be restored with the key. The ciphertext keeps a format that is consistent with the logical rules of the original data.

Average: The average scheme is often used in statistical scenarios. For numerical data, we first calculate their mean, and then randomly distribute the desensitized values around the mean, thus keeping the sum of the data constant.
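One way to keep the sum constant, sketched under the assumption that uniform noise is acceptable: draw random noise for each record, subtract the average noise so that the noise sums to zero, and add the result to the mean. The function name and the `spread` parameter are illustrative.

```python
import random
from statistics import fmean


def mask_around_mean(values, spread, rng):
    """Return values scattered around the mean whose sum equals the original sum."""
    mean = fmean(values)
    noise = [rng.uniform(-spread, spread) for _ in values]
    shift = fmean(noise)  # removing the average noise makes the noise sum to zero
    return [mean + n - shift for n in noise]


salaries = [3000.0, 4500.0, 5200.0, 6100.0]
masked = mask_around_mean(salaries, 500.0, random.Random())
print(masked, sum(masked))
```

The sum matches the original up to floating-point rounding, while individual values no longer correspond to any one record.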

Offset and Rounding: This method changes numeric data by a random shift and then rounds the result. Offset and rounding keeps the values within an approximately realistic range while maintaining the security of the data. The result is closer to the real data than the previous schemes, which is valuable in big data analysis scenarios.
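A minimal sketch of offset and rounding, assuming a uniform random shift of at most `max_offset` followed by rounding to the nearest multiple of `base` (both parameter names are hypothetical):

```python
import random


def offset_and_round(value: float, max_offset: float, base: int, rng: random.Random) -> int:
    """Shift value by a random offset, then round to the nearest multiple of base."""
    shifted = value + rng.uniform(-max_offset, max_offset)
    return base * round(shifted / base)


print(offset_and_round(12345.0, 50.0, 100, random.Random()))
```

With these arguments the result is always 12300 or 12400, so the order of magnitude is kept while the exact value is hidden.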

Recommended model for data masking: "ML-NPB-5660"

4. Commonly used Data Masking Techniques

(1). Statistical Techniques

Data sampling and data aggregation

- Data sampling: Analyzing and evaluating the original data set through a representative subset of it. Sampling is an important way to improve the effectiveness of de-identification techniques.

- Data aggregation: A collection of statistical techniques (such as summation, counting, averaging, maximum, and minimum) applied to attributes in microdata. The result is representative of all records in the original data set.
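Both statistical techniques can be sketched with the Python standard library. The record layout and function names below are assumptions for illustration; simple random sampling is used, which is only one of several possible sampling strategies.

```python
import random
from statistics import fmean


def sample_records(records, k, rng):
    """Simple random sample of k records, without replacement."""
    return rng.sample(records, k)


def aggregate(values):
    """Publish only summary statistics instead of record-level values."""
    return {
        "count": len(values),
        "sum": sum(values),
        "mean": fmean(values),
        "min": min(values),
        "max": max(values),
    }


records = [{"user": f"u{i}", "age": 20 + i} for i in range(10)]
subset = sample_records(records, 3, random.Random())
print(aggregate([r["age"] for r in subset]))
```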

(2). Cryptography

Cryptography is a common method to desensitize or enhance the effectiveness of desensitization. Different types of encryption algorithms can achieve different desensitization effects.

- Deterministic encryption: A non-random symmetric encryption. It usually processes ID data and can decrypt and restore the ciphertext to the original ID when necessary, but the key needs to be properly protected.

- Irreversible encryption: A hash function is used to process the data, usually ID data. The result cannot be directly decrypted, so the mapping relationship must be saved if the original value needs to be looked up. In addition, because of the nature of hash functions, collisions may occur.

- Homomorphic encryption: A homomorphic encryption algorithm is used. Its defining property is that the decrypted result of an operation on ciphertext is the same as the result of the same operation on plaintext. It is therefore commonly used for numerical fields, but it is not widely used for performance reasons.
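The irreversible (hash-based) option can be sketched with the standard library. This example swaps in a keyed hash, HMAC-SHA256, rather than a plain hash, on the assumption that a secret key is available; the key, the sample ID, and the function name are placeholders. A mapping table is kept because the hash itself cannot be reversed.

```python
import hashlib
import hmac


def hash_id(raw_id: str, key: bytes) -> str:
    """Map an ID to a fixed-length token with HMAC-SHA256 (cannot be decrypted)."""
    return hmac.new(key, raw_id.encode("utf-8"), hashlib.sha256).hexdigest()


key = b"example-key-not-for-production"  # placeholder key, an assumption
mapping = {}                              # token -> original ID, access must be restricted

token = hash_id("110101199001011234", key)
mapping[token] = "110101199001011234"
print(token)
```

The same ID always yields the same token under the same key, so masked tables can still be joined on the hashed column.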

(3). Suppression Technology

Suppression technology deletes or masks data items that do not meet privacy protection requirements, so that they are not published.

- Masking: the most common desensitization method, which masks part of an attribute value. For example, a phone number or ID card number is partly replaced with asterisks, or an address is truncated.

- Local suppression: deleting specific attribute values (columns), that is, removing non-essential data fields.

- Record suppression: deleting specific records (rows), that is, removing non-essential data records.
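Column and row suppression can be sketched on a list of dictionaries. The field names and the rule for which records to drop are assumptions chosen for illustration.

```python
def suppress_columns(rows, columns):
    """Local suppression: drop the named attributes from every record."""
    return [{k: v for k, v in row.items() if k not in columns} for row in rows]


def suppress_records(rows, should_drop):
    """Record suppression: drop every record for which should_drop(row) is true."""
    return [row for row in rows if not should_drop(row)]


rows = [
    {"name": "Alice", "id_card": "110101199001011234", "city": "Beijing", "vip": False},
    {"name": "Bob", "id_card": "310101198512124321", "city": "Shanghai", "vip": True},
]
published = suppress_columns(rows, {"id_card"})
published = suppress_records(published, lambda r: r["vip"])
print(published)  # [{'name': 'Alice', 'city': 'Beijing', 'vip': False}]
```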

(4). Pseudonym Technology

Pseudonymization is a de-identification technique that uses a pseudonym to replace a direct identifier (or other sensitive identifier). Pseudonym techniques create a unique identifier for each individual data subject to use in place of direct or sensitive identifiers.

- Random values can be generated independently to correspond to the original IDs. The mapping table is saved, and access to it is strictly controlled.

- Encryption can also be used to produce pseudonyms, but the decryption key must be kept properly.

This technology is widely used where there are many independent data users, such as OpenID in the open platform scenario, where different developers obtain different OpenIDs for the same user.
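The mapping-table approach can be sketched as follows. The class name, the per-application key, and the 128-bit random token are assumptions for illustration; this is not how any specific open platform issues its identifiers.

```python
import secrets


class Pseudonymizer:
    """Issue a random pseudonym per (application, user) pair and keep the mapping table."""

    def __init__(self):
        self._forward = {}  # (app_id, user_id) -> pseudonym
        self._reverse = {}  # pseudonym -> (app_id, user_id); access must be restricted

    def pseudonym(self, app_id: str, user_id: str) -> str:
        key = (app_id, user_id)
        if key not in self._forward:
            token = secrets.token_hex(16)
            while token in self._reverse:  # regenerate on the (very unlikely) collision
                token = secrets.token_hex(16)
            self._forward[key] = token
            self._reverse[token] = key
        return self._forward[key]

    def resolve(self, token: str):
        """Look up the original pair; only trusted code should call this."""
        return self._reverse[token]


p = Pseudonymizer()
print(p.pseudonym("app_a", "user_1"))
print(p.pseudonym("app_b", "user_1"))  # a different pseudonym for the same user
```

Because each application receives its own pseudonym, two developers cannot link their records for the same user by comparing identifiers.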

(5). Generalization Techniques

Generalization is a de-identification technique that reduces the granularity of selected attributes in a data set and provides a more general, abstract description of the data. It is easy to implement and preserves the authenticity of record-level data. It is commonly used in data products or data reports.

- Rounding: involves selecting a rounding base for the selected attribute and rounding up or down to it, yielding results such as 100, 500, 1K, and 10K.

- Top and bottom coding techniques: Replace values above (or below) the threshold with a threshold representing the top (or bottom) level, yielding a result of "above X" or "below X"
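Both generalization techniques are short enough to sketch directly. The function names and the example thresholds are assumptions for illustration.

```python
import math


def round_down(value: float, base: int) -> int:
    """Round value down to a multiple of base."""
    return math.floor(value / base) * base


def round_up(value: float, base: int) -> int:
    """Round value up to a multiple of base."""
    return math.ceil(value / base) * base


def top_bottom_code(value: float, low: float, high: float) -> str:
    """Replace values outside [low, high] with a label naming the threshold."""
    if value > high:
        return f"above {high}"
    if value < low:
        return f"below {low}"
    return str(value)


print(round_down(1234, 500), round_up(1234, 500))  # 1000 1500
print(top_bottom_code(95, 18, 90))                 # above 90
```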

(6). Randomization Techniques

As a kind of de-identification technique, randomization modifies the value of an attribute at random, so that the value after randomization differs from the original real value. This process reduces the ability of an attacker to derive an attribute value from other attribute values in the same data record, but it affects the authenticity of the resulting data. It is commonly used to produce test data from production data.
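One randomization approach, shown here as an assumption since the text does not name a specific method, is to permute the values of one column across records. This breaks the link between that attribute and the rest of each record while keeping the overall distribution of the column. Note that a random permutation may leave some values in their original rows.

```python
import random


def shuffle_column(rows, column, rng):
    """Return copies of rows with the values of one column randomly permuted."""
    values = [row[column] for row in rows]
    rng.shuffle(values)
    return [{**row, column: value} for row, value in zip(rows, values)]


rows = [
    {"name": "Alice", "salary": 3000},
    {"name": "Bob", "salary": 4500},
    {"name": "Carol", "salary": 5200},
]
print(shuffle_column(rows, "salary", random.Random()))
```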

Post time: Sep-27-2022